Hand Tracker

Stereo hand tracker with multi-CNN prediction and triplane fusion.

This project was done with a classmate as part of Professor Shenlong Wang’s CS 498 (Machine Perception) course at UIUC. Tracking hands in real time is a surprisingly difficult problem, especially if you don’t want to add extra hardware such as active depth sensors. Our proposed solution combined two papers:

1. “3D Hand Pose Tracking and Estimation Using Stereo Matching” for hand segmentation and depth estimation, and

2. “Robust 3D Hand Pose Estimation in Single Depth Images: from Single-View CNN to Multi-View CNNs” for joint estimation.

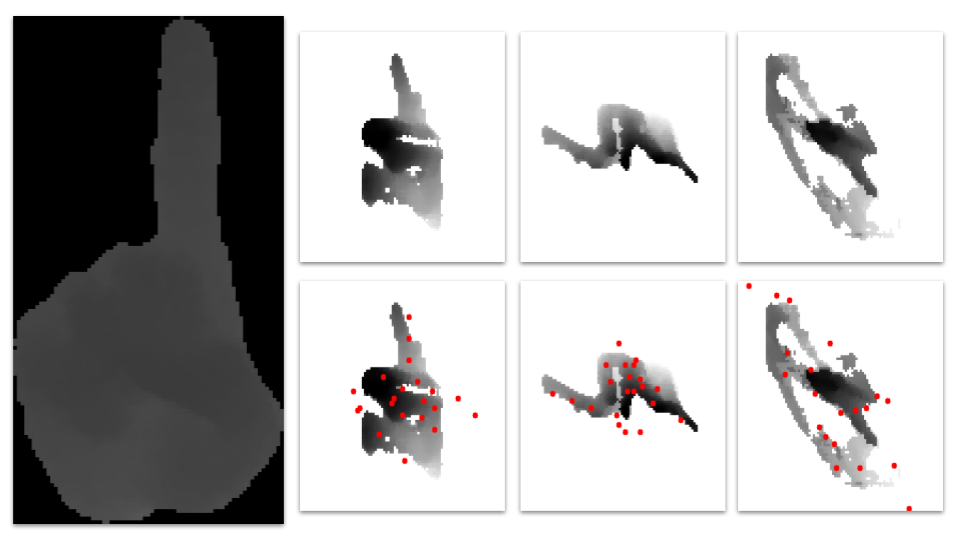

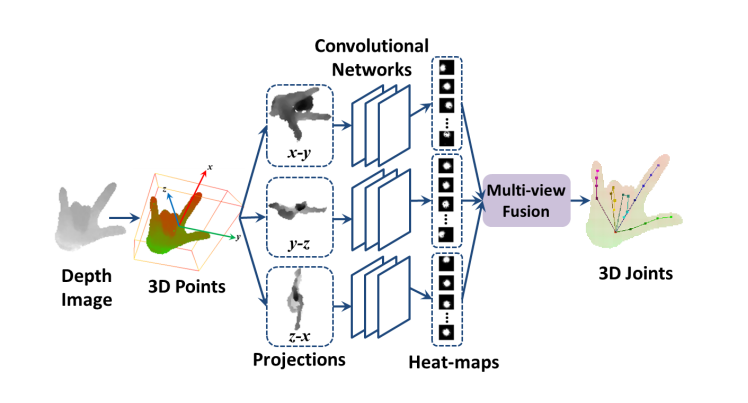

I helped a bit with the hand segmentation in (1), but my main contribution was re-implementing (2). The general idea is to back-project the estimated hand depths into a 3D point cloud, use PCA to fit an oriented bounding box around it, and then project the points onto the three orthogonal planes of that box.

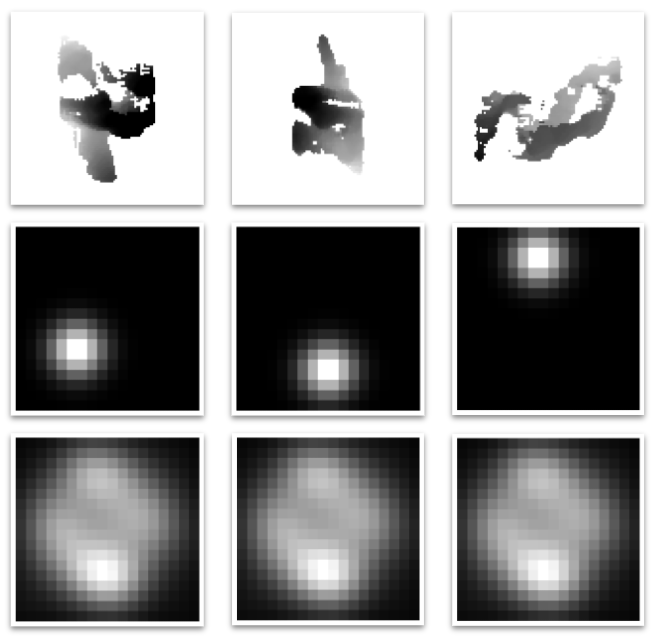
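To make the PCA and projection step more concrete, here is a minimal NumPy sketch. It is not the project's C++ implementation: the function names, the 96-pixel resolution, the plane names (`xy`, `yz`, `zx`), and the convention that each pixel keeps the normalized depth of its nearest point (with 1.0 meaning "empty") are all assumptions chosen for illustration.

```python
import numpy as np

def pca_obb_axes(points):
    """Return the centroid and a 3x3 matrix whose rows are the principal axes,
    ordered by decreasing variance (hypothetical helper)."""
    center = points.mean(axis=0)
    centered = points - center
    cov = centered.T @ centered / len(points)
    eigvals, eigvecs = np.linalg.eigh(cov)   # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1]        # largest variance first
    axes = eigvecs[:, order].T
    if np.linalg.det(axes) < 0:              # keep a right-handed frame
        axes[2] *= -1
    return center, axes

def triplane_projections(points, res=96):
    """Project an (N, 3) point cloud onto the three planes of its PCA-aligned
    bounding box. Returns a dict of (res, res) images with values in [0, 1]."""
    center, axes = pca_obb_axes(points)
    local = (points - center) @ axes.T       # coordinates in the box frame
    lo, hi = local.min(axis=0), local.max(axis=0)
    extent = np.maximum(hi - lo, 1e-9)       # avoid division by zero
    norm = (local - lo) / extent             # each coordinate scaled to [0, 1]

    planes = {}
    # (u, v, d): u maps to columns, v maps to rows, d is the projection depth
    for name, (u, v, d) in {"xy": (0, 1, 2), "yz": (1, 2, 0), "zx": (2, 0, 1)}.items():
        img = np.ones((res, res), dtype=np.float32)  # 1.0 marks empty pixels
        cols = np.minimum((norm[:, u] * res).astype(int), res - 1)
        rows = np.minimum((norm[:, v] * res).astype(int), res - 1)
        # keep the smallest depth value that lands on each pixel
        np.minimum.at(img, (rows, cols), norm[:, d].astype(np.float32))
        planes[name] = img
    return planes
```

Calling `triplane_projections(points)` on an `(N, 3)` array of back-projected hand points yields three depth-like images, one per bounding-box plane, which is the kind of input the per-view networks expect.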

After extracting the triplane projections, we feed each projection through the multi-CNN to extract heatmaps for all 21 hand joints.
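For readers unfamiliar with heatmap regression, the sketch below shows what such a heatmap could look like for one view: one small image per joint, peaking at the joint's 2D location in that projection. The Gaussian shape, the 18-pixel size, and `sigma` are illustrative assumptions, not values taken from our training code.

```python
import numpy as np

NUM_JOINTS = 21  # number of hand joints, as in the text

def gaussian_heatmaps(joints_uv, size=18, sigma=1.5):
    """Build one Gaussian heatmap per joint for a single view.

    joints_uv: (J, 2) array of (col, row) pixel coordinates.
    Returns an array of shape (J, size, size), indexed [joint, row, col].
    """
    rows, cols = np.mgrid[0:size, 0:size]
    du = cols[None] - joints_uv[:, 0, None, None]  # horizontal offset to each joint
    dv = rows[None] - joints_uv[:, 1, None, None]  # vertical offset to each joint
    return np.exp(-(du ** 2 + dv ** 2) / (2.0 * sigma ** 2))
```

A network trained against targets like these outputs `NUM_JOINTS` heatmaps per view, so three views give `3 * NUM_JOINTS` heatmaps per frame.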

Each heatmap encodes how confident the network is that a given joint lies at each location in a projection. The heatmaps from the three planar projections are then fused to output a final 3D prediction for each joint. This sounds great in theory because we get to manually extract a bit more information than raw depth images alone provide, but in practice, we ran into a lot of issues similar to mode collapse.
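As a rough illustration of the fusion idea, the sketch below takes the peak of each heatmap and averages the two estimates available for every axis (each 3D coordinate is visible in two of the three planes). This is a deliberately simplified stand-in, not the fusion procedure from the paper or our C++ code, and the axis-to-row/column convention is an assumption.

```python
import numpy as np

def fuse_triplane_heatmaps(hm_xy, hm_yz, hm_zx):
    """Naive peak-averaging fusion of three per-view heatmap stacks.

    Each input has shape (J, S, S), indexed [joint, row, col]. Assumed layout:
      hm_xy: col = x, row = y;  hm_yz: col = y, row = z;  hm_zx: col = z, row = x.
    Returns (J, 3) coordinates normalized to [0, 1] in the bounding-box frame.
    """
    def peaks(hm):
        num_joints, size, _ = hm.shape
        flat = hm.reshape(num_joints, -1).argmax(axis=1)
        rows, cols = np.unravel_index(flat, (size, size))
        # use pixel centers, scaled to [0, 1]
        return (cols + 0.5) / size, (rows + 0.5) / size

    x1, y1 = peaks(hm_xy)
    y2, z1 = peaks(hm_yz)
    z2, x2 = peaks(hm_zx)
    # every axis is observed in two views, so average the two estimates
    return np.stack([(x1 + x2) / 2, (y1 + y2) / 2, (z1 + z2) / 2], axis=1)
```

The normalized output would still need to be scaled by the bounding-box extents and rotated back by the PCA axes to get camera-space coordinates. A more faithful fusion would use the full confidence distributions rather than only their peaks.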

Unfortunately, we ran out of time to fully fix this issue and put everything into an end-to-end pipeline (the PCA and projection were done in C++, the multi-CNN was done in PyTorch, and the triplane fusion was done in C++ again). However, we did find that the average error on our test set was only around 51 millimeters, which might be acceptable depending on the use case.
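Assuming the average error is the usual mean per-joint Euclidean distance between predicted and ground-truth joint positions, it can be computed roughly as follows. The function name and array layout are hypothetical.

```python
import numpy as np

def mean_joint_error_mm(pred, gt):
    """Mean Euclidean distance over all frames and joints.

    pred, gt: arrays of shape (N, J, 3) holding joint positions in millimeters.
    """
    per_joint = np.linalg.norm(pred - gt, axis=-1)  # (N, J) distances
    return float(per_joint.mean())
```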

All of our code can be found on GitHub. Although I don’t plan on revisiting this specific project in the near future, I still think it’s interesting to explore how triplanes are used for 3D understanding (they also seem to have some use in 3D content generation, through GANs and diffusion models).